Intraoperative Navigation and Localization of Multimodal Sensors

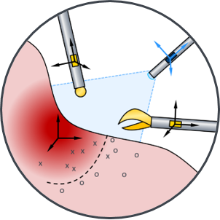

Navigation and orientation during a surgical procedure are crucial for the localization of sensor data and the automated documentation of removed tissue samples. For this purpose, a synthetic bladder model based on intraoperative camera images is being developed to create a patient-specific framework that combines all the data collected during the procedure.

Scientific Question

The interconnected vascular structures in the bladder provide reliable orientation information that enables accurate mapping, even when the filling level or the shape of the bladder changes. This information is to be extracted and used for pattern recognition and orientation. Particular attention is paid to the robustness and real-time capability of the algorithms so that structures are recognized consistently across different interventions. A patient-specific geometric representation of the organ is being developed that already accounts for deformation-related effects and serves as the basis for comprehensive multiphysics tissue modeling and sensor fusion in project B3.

Methodology and Solution Approaches

In order to meet the requirements of the intraoperative environment, robust landmark information and adapted mapping algorithms must be developed. For each graph node, point-specific feature spaces should be determined in addition to the structural descriptive information of the graph. Conventional methods based on the features of neighboring pixels are insufficient due to intraoperative challenges such as varying brightness values, reflections, and deformations. Therefore, additional information is required to describe the feature space better.
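To make the idea of a point-specific feature that does not rely on neighboring pixel intensities more concrete, the following minimal sketch computes a purely geometric descriptor for one graph node: its degree and the sorted angular gaps between its incident branches. The function name `node_descriptor`, the `positions` and `adjacency` dictionaries, and the choice of descriptor are illustrative assumptions, not the project's actual method. Such a descriptor is unaffected by brightness and image rotation, but it is not invariant to tissue deformation.

```python
import math

def node_descriptor(node, positions, adjacency):
    """Return (degree, sorted angular gaps between incident branches) for one node.

    positions: dict node id -> (x, y) image coordinates (hypothetical input format)
    adjacency: dict node id -> list of neighboring node ids
    """
    x0, y0 = positions[node]
    # Direction of each incident branch, measured at the node, in [0, 2*pi].
    angles = sorted(
        math.atan2(positions[n][1] - y0, positions[n][0] - x0) % (2 * math.pi)
        for n in adjacency[node]
    )
    degree = len(angles)
    if degree < 2:
        return degree, []
    # Gaps between consecutive branch directions; rotating the whole image
    # shifts every angle by the same amount and leaves the gaps unchanged.
    gaps = [
        (angles[(i + 1) % degree] - angles[i]) % (2 * math.pi)
        for i in range(degree)
    ]
    return degree, sorted(gaps)
```

In practice, a descriptor of this kind would only be one component of the feature space and would need to be combined with information that tolerates deformation.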

Building on the proven feature description of landmark nodes, the final task is to detect related structures. In addition to recovering the features, it must be determined which structures recognized in the current observation are already captured in the global representation, and which structures are captured in the global representation but no longer appear in the current observation.
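The comparison between the current observation and the global representation can be sketched as a partition of landmark nodes. The example below is a simplified illustration, assuming that each landmark is described by a fixed-length numeric descriptor and that a greedy nearest-descriptor assignment with a distance threshold is sufficient. The function name `partition_landmarks`, the threshold `max_dist`, and the dictionary inputs are hypothetical. In addition to the two cases named in the text, the sketch also reports observed landmarks without a counterpart in the global representation.

```python
import math

def partition_landmarks(observed, global_map, max_dist=0.5):
    """Greedily match observed landmarks to the global representation.

    observed, global_map: dict landmark id -> descriptor (tuple of floats).
    Returns (matches, new_ids, unseen_ids):
      matches    - observed id -> global id for landmarks found in both
      new_ids    - observed landmarks with no counterpart in the global map
      unseen_ids - global landmarks missing from the current observation
    """
    # All candidate pairs with equal descriptor length, closest first.
    candidates = sorted(
        (math.dist(d_obs, d_glob), obs_id, glob_id)
        for obs_id, d_obs in observed.items()
        for glob_id, d_glob in global_map.items()
        if len(d_obs) == len(d_glob)
    )
    matches = {}
    used_global = set()
    for dist, obs_id, glob_id in candidates:
        if dist > max_dist:
            break  # remaining pairs are even farther apart
        if obs_id in matches or glob_id in used_global:
            continue  # each landmark is assigned at most once
        matches[obs_id] = glob_id
        used_global.add(glob_id)
    new_ids = set(observed) - set(matches)
    unseen_ids = set(global_map) - used_global
    return matches, new_ids, unseen_ids
```

A greedy assignment is chosen here only for brevity. It ignores the graph structure between landmarks, which a practical approach would likely exploit to resolve ambiguous matches.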

Franziska Krauß

M.Sc., PhD Student B1

Carina Veil

Dr.-Ing.

Oliver Sawodny

Prof. Dr.-Ing. habil. Dr. h.c., Spokesperson of GRK 2543